Interpretation of digital images and health data is a cognitive task that we support with advanced software and, where possible, automate using AI methods. Machine learning has always played an important role here, because it allows us to optimize algorithms given suitable training data. In recent years, deep learning has risen to prominence. It allows "end-to-end training" of a larger part of the processing pipeline and achieves much better results, provided that enough training data is available.

Deep learning does not replace all existing algorithms, however; it is an extremely valuable new tool in our toolbox. We use deep neural networks for automated contouring of structures, faster image registration, extracting information from text, classifying pathologies and much more. To build complete clinical solutions with a good user experience, we combine deep learning with traditional processing algorithms, workflow tools and task-specific visualizations. Deep learning for image analysis also works well together with image registration, which allows us to fuse information from different timepoints or to correct for motion during time-series acquisitions, as a preprocessing step for improved decision support.

Application Areas

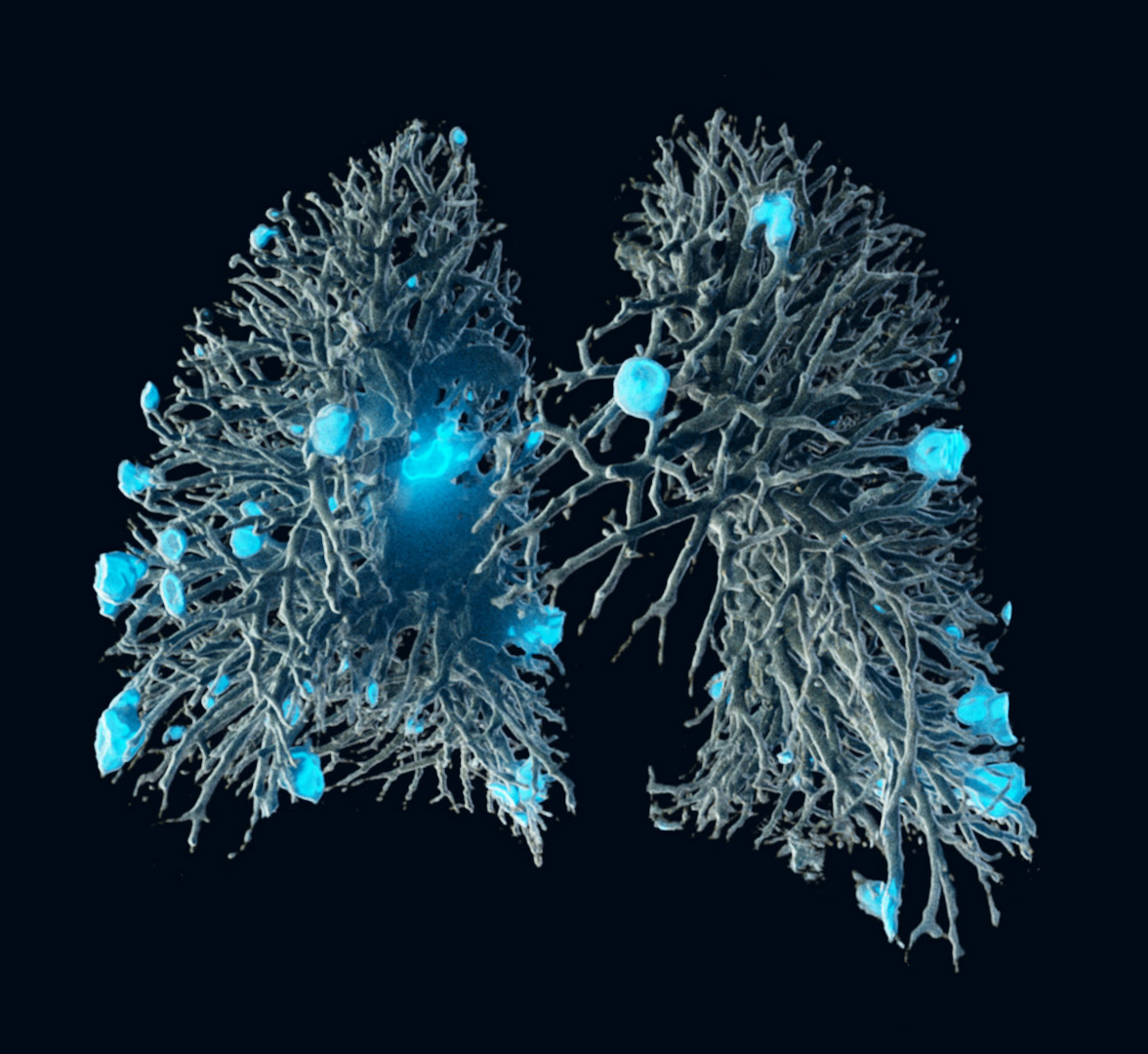

We employ deep neural networks for various tasks on image and non-image data. Automated contouring (segmentation) benefits greatly from these new technologies and requires fewer training images than many other tasks, which makes it a particularly important and successful application area. For instance, we use deep learning to segment the liver in CT and MR, its vessel systems in contrast-enhanced datasets, and tumors, so that various treatment approaches can be planned much faster and with less user interaction. In the lung domain, we segment the lung itself, its lobes, tumors, ground-glass opacities, emphysema and more. Radiotherapy planning requires contouring many organs at risk throughout the body, some of which were extremely difficult to segment automatically before the rise of modern neural networks. These examples are by no means exhaustive; we apply deep learning in many other medical domains as well.

Tools and Infrastructure

A key challenge in deep learning is that robust, clinically applicable algorithms require training data that is representative of all target scenarios in which the algorithm should work. Traditional medical software development also required a representative data collection for testing, but modern data-driven approaches need large, multicenter data collections with appropriate expert annotations.

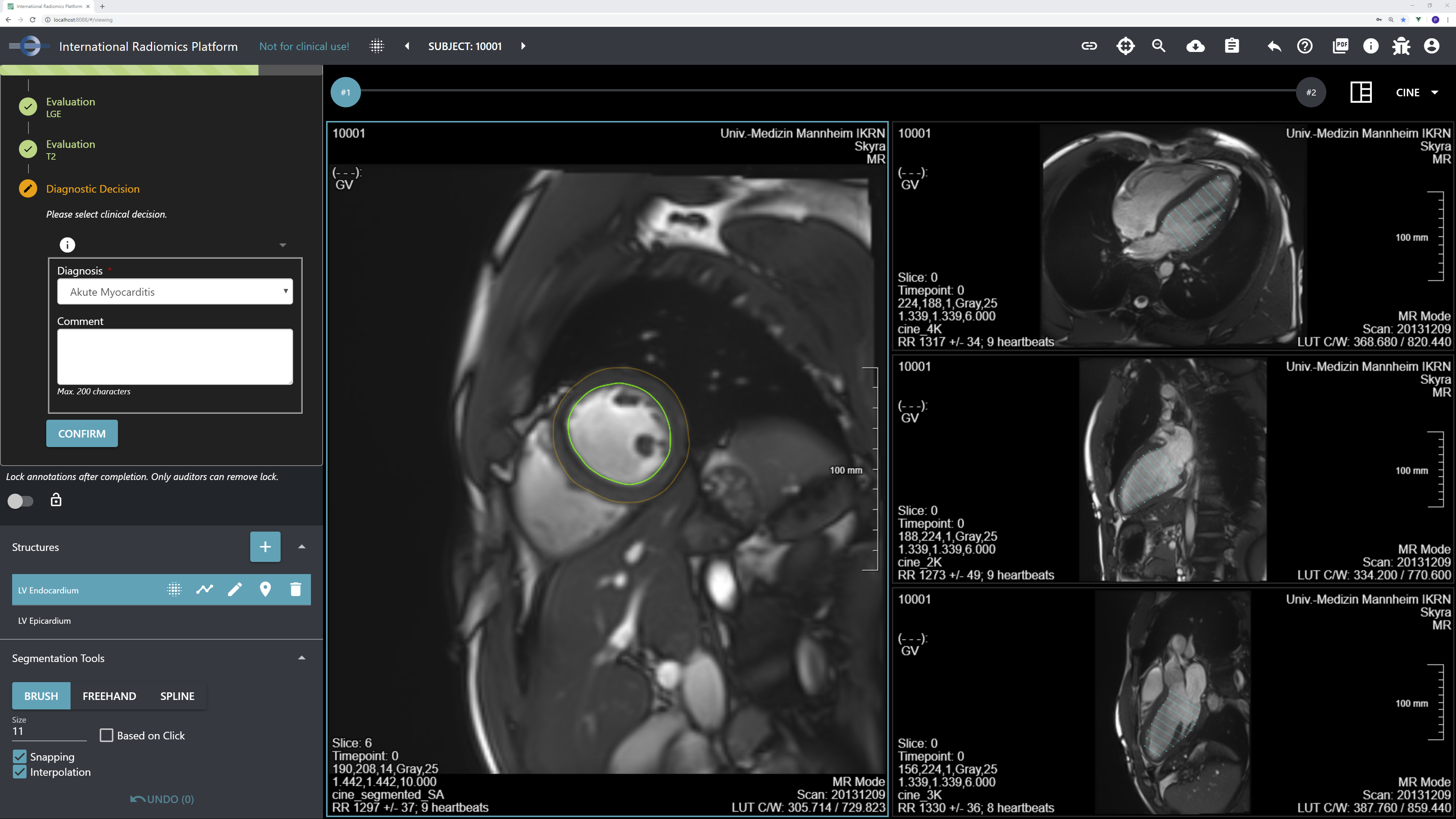

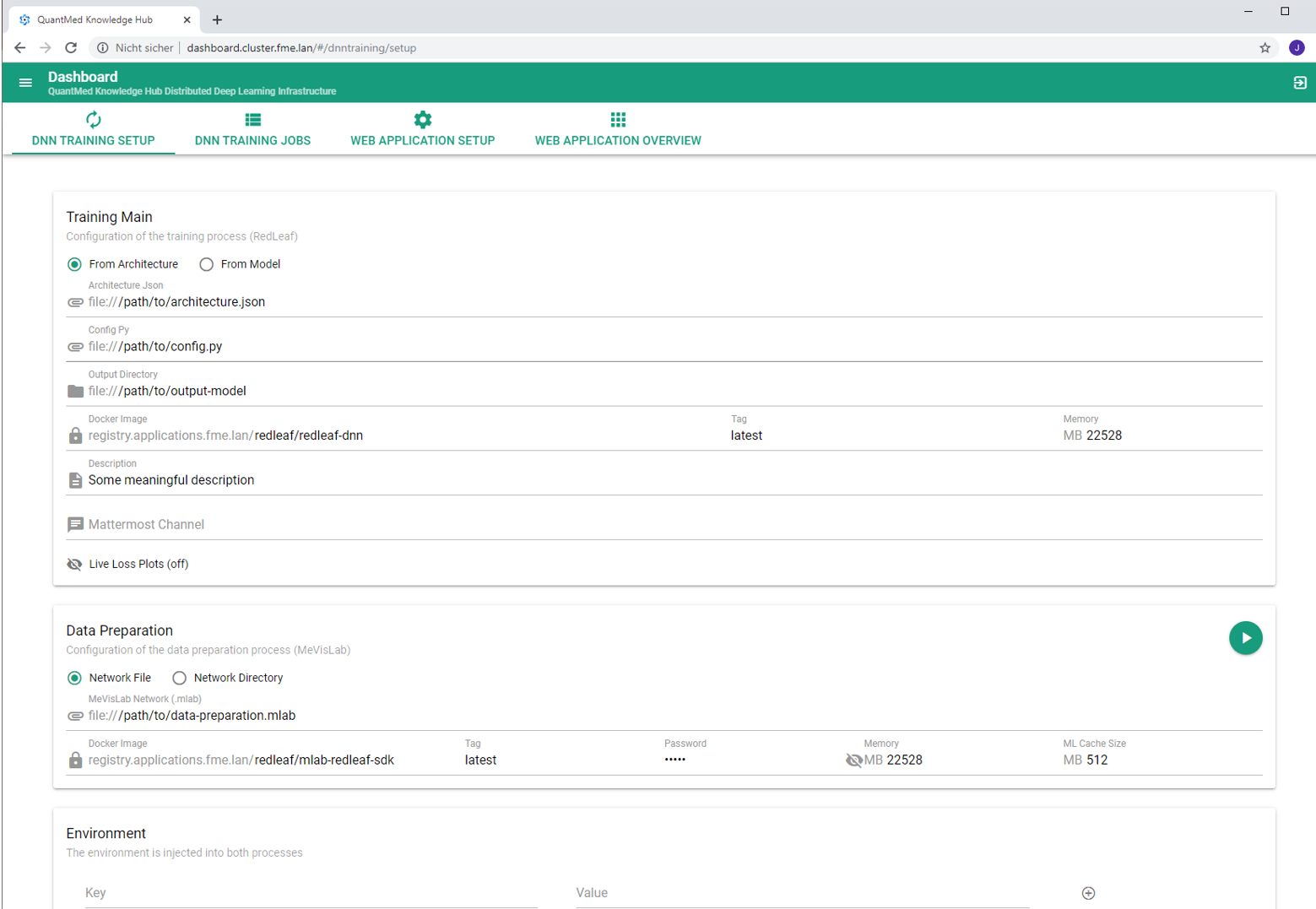

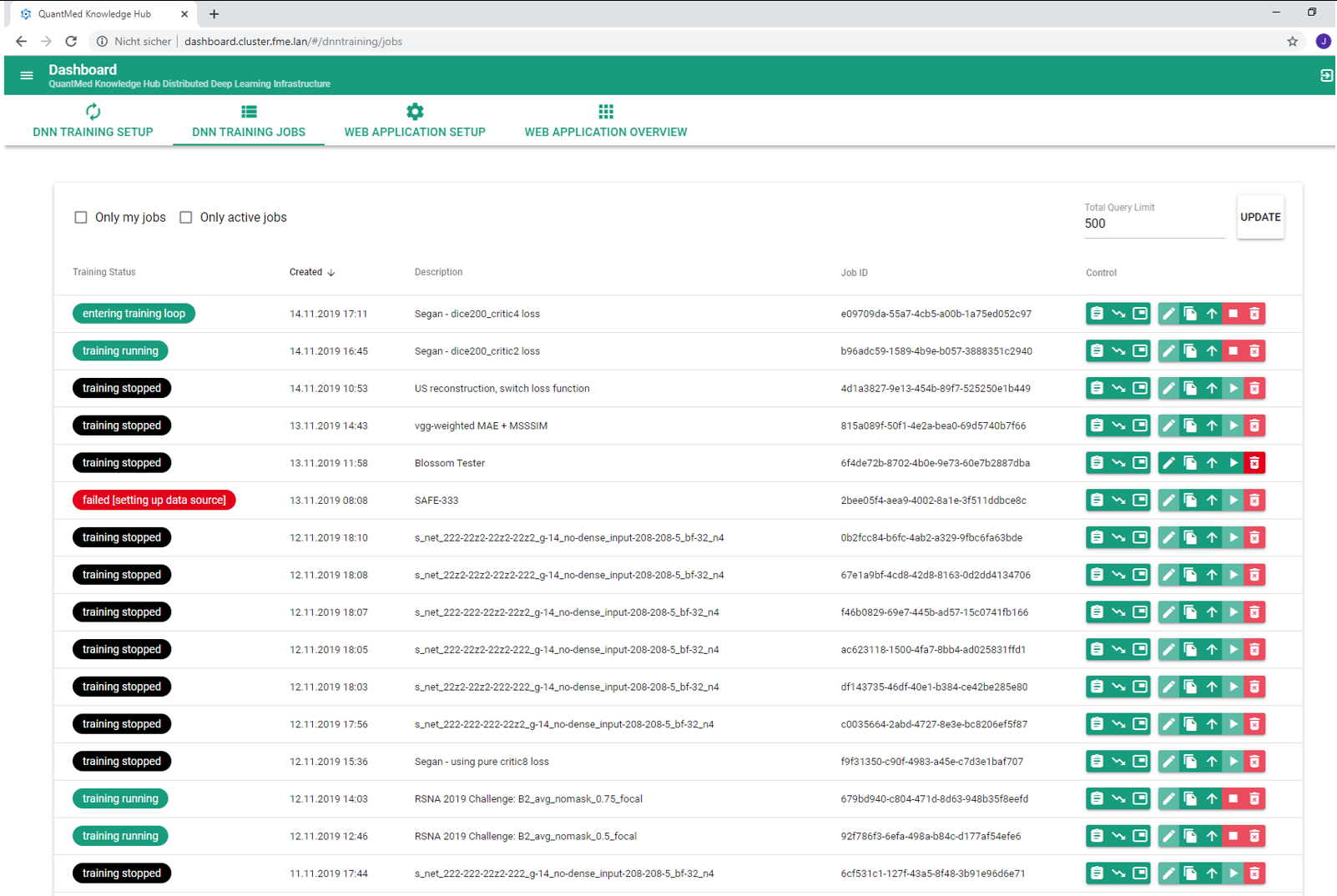

Therefore, we have developed an AI collaboration toolkit that contains infrastructure components and web frontends supporting the full collaborative research and development workflow. Its scope spans data curation (viewing, filtering, annotating), AI development (model training and evaluation) and final clinical evaluation in application prototypes. The toolkit can be hosted on-site in a hospital, at Fraunhofer MEVIS or in the cloud. Clinicians and technical experts log in to it to perform their tasks.

Let's Work Together

For decades, we have been developing the MeVisLab rapid prototyping platform and using it to supply application prototypes to clinical collaboration partners. While always striving to increase the degree of automation for efficient data processing, we have also refined the interactive correction tools that are necessary for practical applicability to all cases. Behind the scenes, our web-based AI collaboration toolkit uses MeVisLab and therefore has access to all our existing algorithms.

We support clinical studies in all medical specialties, as well as studies for commercial partners such as pharmaceutical companies. We make our tools available to partners or use them ourselves to conduct contract research, either in funded project collaborations or under commercial research contracts. We perform or support efficient data curation, in-house or on-premise, for clinical and commercial partners who plan to train their algorithms on their own infrastructure. Please get in touch so that we can understand your needs. Together with you, we determine the right mode of collaboration and take on projects ranging from feasibility studies to quality-assured deliveries of components for medical products.

Fraunhofer Institute for Digital Medicine MEVIS